Collective Note: We’re opening the notebook early. Twelve Balloons is where we explore what happens when interfaces start listening and responding. This space will collect our prototypes, reflections, and the occasional provocation as we design interfaces that talk.

So We Built This Thing

There wasn’t a grand plan. Just a feeling. We work with AI, but we didn’t want to build another chatbot. Well… we kind of already did. Our Twelve Balloons chatbot exists, but that’s a story for another post. This time, we were after something else. Something you could feel. Something responsive. Designed with care. And, above all, something useful.

So we started with music. We’ve always cared about it, all kinds, all moods. It felt like the right place to begin.

Now, does the world need yet another way to play “Espresso Macchiato”? Probably not. But imagine calling up a song just by mentioning it in conversation, without searching. Or better yet, imagine a system that understands what you’re already talking about and starts the right track without you even asking.

That’s the shift we were curious about. It led us to a bigger thought:

What if conversation wasn’t just an extra layer?

What if it was the interface itself?

Technically neat, but not the point

Where it actually lives

Our Spotify Agent isn’t a product. It’s not even standalone. When you use it, you’re inside the Twelve Balloons chatbot interface. That’s the whole idea.

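You log in with a button, right there in our chatbot. The browser opens Spotify’s own login flow, and OAuth handles permissions directly: you approve access on Spotify’s side, and the chatbot receives an access token, never your password.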
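The post doesn’t say which OAuth flow the chatbot uses. As a minimal sketch, assuming the Authorization Code flow with PKCE (which Spotify supports for apps that can’t keep a client secret), the first step is building the authorize URL that the login button opens. `CLIENT_ID` and `REDIRECT_URI` are placeholders, and the two playback scopes are an assumption about what a music agent needs.

```typescript
import { createHash, randomBytes } from "node:crypto";

// Placeholders (assumptions): use the values registered for your own Spotify app.
const CLIENT_ID = "your-spotify-client-id";
const REDIRECT_URI = "http://127.0.0.1:3000/callback";

// Assumed scopes: read the current player state and control playback.
const SCOPES = ["user-read-playback-state", "user-modify-playback-state"];

// PKCE pair: the verifier stays on our side; only its SHA-256 hash goes to Spotify.
function createPkcePair(): { verifier: string; challenge: string } {
  // 32 random bytes encode to 43 URL-safe characters, the minimum length PKCE allows.
  const verifier = randomBytes(32).toString("base64url");
  const challenge = createHash("sha256").update(verifier).digest("base64url");
  return { verifier, challenge };
}

// URL the login button opens; Spotify's own page handles login and consent.
function buildAuthorizeUrl(challenge: string, state: string): string {
  const params = new URLSearchParams({
    client_id: CLIENT_ID,
    response_type: "code",
    redirect_uri: REDIRECT_URI,
    scope: SCOPES.join(" "),
    code_challenge_method: "S256",
    code_challenge: challenge,
    state, // random value, checked again on the redirect to guard against CSRF
  });
  return `https://accounts.spotify.com/authorize?${params.toString()}`;
}
```

After Spotify redirects back with a `code` parameter, the app sends that code together with the stored verifier to `https://accounts.spotify.com/api/token` to obtain the access token used for every later call.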

Under the Hood

Let’s get something out of the way. This isn’t a technical breakthrough. It’s just a working UI that feels right. We’re not claiming it’s a new protocol. It’s a tool call. It behaves a bit like MCP (the Model Context Protocol), but there’s no official integration, and Spotify doesn’t offer one anyway.
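To make “it’s a tool call” concrete: the model is handed a function description, and when a request fits, it replies with the function name and JSON arguments instead of plain text. A minimal sketch, assuming a hypothetical `play_song` tool; the JSON-Schema-style `parameters` object is the common convention, but the surrounding envelope differs between LLM providers.

```typescript
// Hypothetical tool definition in the JSON Schema style most function-calling APIs accept.
interface ToolDefinition {
  name: string;
  description: string;
  parameters: {
    type: "object";
    properties: Record<string, { type: string; description: string }>;
    required: string[];
  };
}

const playSongTool: ToolDefinition = {
  name: "play_song",
  description: "Search Spotify for a track and start playing the top result.",
  parameters: {
    type: "object",
    properties: {
      query: { type: "string", description: "Song title and/or artist, e.g. 'Bob Marley'." },
    },
    required: ["query"],
  },
};

// The model answers with the tool name and its arguments as a JSON string;
// validate them before anything is sent to Spotify.
function parsePlaySongArgs(rawArguments: string): { query: string } {
  const parsed: unknown = JSON.parse(rawArguments);
  const query =
    typeof parsed === "object" && parsed !== null
      ? (parsed as { query?: unknown }).query
      : undefined;
  if (typeof query !== "string" || query.trim() === "") {
    throw new Error('play_song expects arguments like {"query": "Bob Marley"}');
  }
  return { query: query.trim() };
}
```

Validating the arguments on our side keeps a malformed model reply from turning into a confusing Spotify error later.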

Say I ask it to play a song by Bob Marley. The agent searches Spotify, gets back a list of tracks, takes the ID of the top result, and sends the play command.
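The search-then-play step maps onto two Spotify Web API calls: `GET /v1/search` with `type=track`, then `PUT /v1/me/player/play` with the track’s URI. A minimal sketch, assuming an access token from the login step; `playSong` and `HttpFetch` are hypothetical names, not the agent’s actual code, and the fetch function is passed in so the flow can be exercised without a network.

```typescript
// Only the parts of the Search response used here.
interface SearchResponse {
  tracks?: { items: { id: string; name: string; uri: string }[] };
}

// Fetch-compatible function type; the global `fetch` (browsers, Node 18+) fits it.
type HttpFetch = (
  url: string,
  init?: { method?: string; headers?: Record<string, string>; body?: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

const API = "https://api.spotify.com/v1";

function buildSearchUrl(query: string, limit = 5): string {
  const params = new URLSearchParams({ q: query, type: "track", limit: String(limit) });
  return `${API}/search?${params.toString()}`;
}

// "Top result" = the first track Spotify returns; null when nothing matched.
function pickTopTrackId(res: SearchResponse): string | null {
  return res.tracks?.items[0]?.id ?? null;
}

// Search, take the top hit, start playback. Returns the track ID, or null if no match.
async function playSong(query: string, token: string, http: HttpFetch): Promise<string | null> {
  const headers = { Authorization: `Bearer ${token}` };
  const searchRes = await http(buildSearchUrl(query), { headers });
  if (!searchRes.ok) throw new Error(`Search failed: HTTP ${searchRes.status}`);

  const trackId = pickTopTrackId((await searchRes.json()) as SearchResponse);
  if (trackId === null) return null;

  // The play endpoint takes track URIs, so the ID is wrapped as spotify:track:<id>.
  const playRes = await http(`${API}/me/player/play`, {
    method: "PUT",
    headers: { ...headers, "Content-Type": "application/json" },
    body: JSON.stringify({ uris: [`spotify:track:${trackId}`] }),
  });
  if (!playRes.ok) throw new Error(`Play failed: HTTP ${playRes.status}`);
  return trackId;
}
```

With a real token this would be called as `await playSong("Bob Marley", accessToken, fetch)`.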

No scrolling, no clicking, no typing out track names. You just ask, and it plays on the device where you’re logged in. There’s a caveat, though: Spotify has its own rules. It only plays music when the Spotify player is active and in the foreground; if it isn’t, nothing happens. So we had to work around that. Not ideal, but fair enough.
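The post doesn’t describe the workaround itself. One plausible approach, sketched here purely as an assumption: when the play call fails with HTTP 404 (which Spotify returns when there is no active device), list the user’s devices with `GET /v1/me/player/devices` and retry with an explicit `device_id`. It still can’t start a Spotify app that isn’t running, so the limitation above remains. `playWithDeviceFallback` and `chooseDevice` are hypothetical names.

```typescript
// Fetch-compatible function type; the global `fetch` fits it.
type HttpFetch = (
  url: string,
  init?: { method?: string; headers?: Record<string, string>; body?: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Fields used from the Get Available Devices response.
interface Device {
  id: string | null;
  is_active: boolean;
  is_restricted: boolean;
  name: string;
}

const PLAYER_URL = "https://api.spotify.com/v1/me/player";

// Prefer the currently active device, else the first one that accepts commands.
function chooseDevice(devices: Device[]): string | null {
  const usable = devices.filter((d) => d.id !== null && !d.is_restricted);
  const active = usable.find((d) => d.is_active);
  return (active ?? usable[0])?.id ?? null;
}

// Try to play; on 404 (no active device), pick a device and target it explicitly.
async function playWithDeviceFallback(trackUri: string, token: string, http: HttpFetch): Promise<void> {
  const headers = { Authorization: `Bearer ${token}`, "Content-Type": "application/json" };
  const body = JSON.stringify({ uris: [trackUri] });

  const first = await http(`${PLAYER_URL}/play`, { method: "PUT", headers, body });
  if (first.ok) return;
  if (first.status !== 404) throw new Error(`Play failed: HTTP ${first.status}`);

  const list = await http(`${PLAYER_URL}/devices`, { headers });
  if (!list.ok) throw new Error(`Device list failed: HTTP ${list.status}`);
  const { devices } = (await list.json()) as { devices: Device[] };

  const deviceId = chooseDevice(devices);
  if (deviceId === null) {
    throw new Error("No Spotify device found: open the Spotify app and try again.");
  }

  const retry = await http(`${PLAYER_URL}/play?device_id=${encodeURIComponent(deviceId)}`, {
    method: "PUT",
    headers,
    body,
  });
  if (!retry.ok) throw new Error(`Play on ${deviceId} failed: HTTP ${retry.status}`);
}
```

Surfacing “open the Spotify app” as a readable message lets the chatbot turn the failure into a conversational reply instead of silently doing nothing.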

The UI is the thing

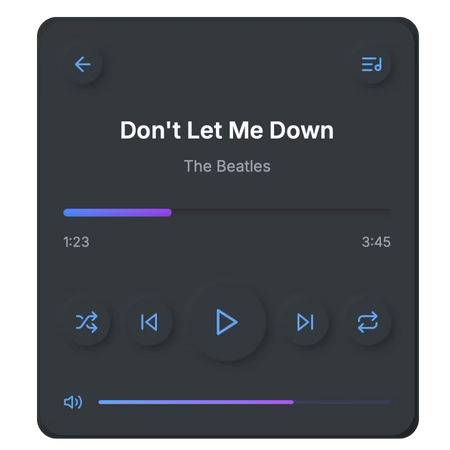

Whether it’s a tool call, an MCP instruction, or some other framework, it’s all background noise. What matters is the feel. The responsiveness. The way the screen reacts to your sentence and the way the button shifts when a track starts.

Everything happens inside the conversation. No extra layers. No clutter. Just a clear action and a fast reply. Something that behaves the way you expect it to.

That’s where we’re aiming.

That’s what makes it interesting.

And honestly, we think that’s the beauty of it.

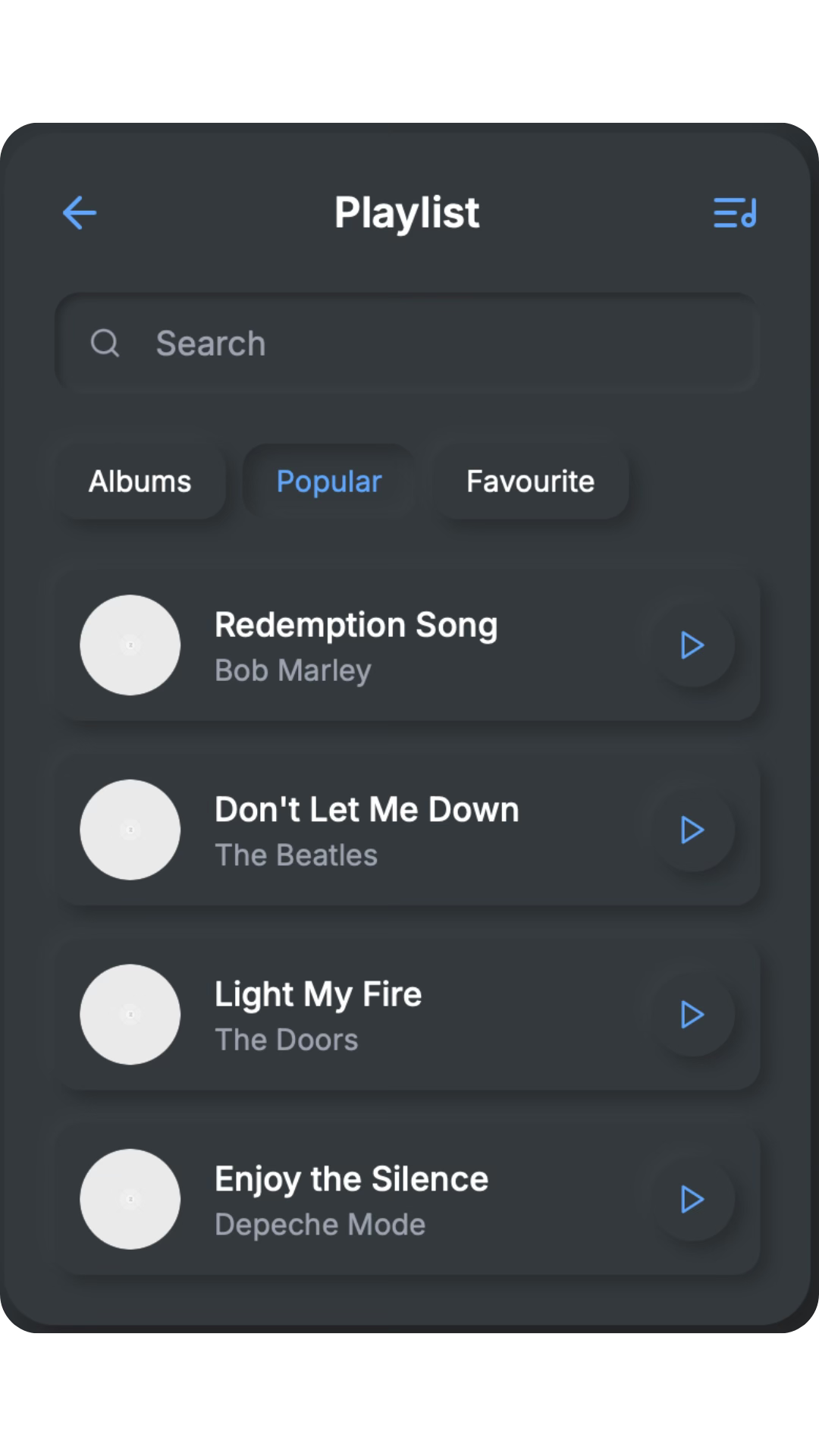

We wrapped the music player in a soft, neumorphic shell and quietly deployed it to Vercel. It has a sense of physicality: touchable edges, something you’d want to press even though it’s digital. The UI stays minimal, with just enough feedback to feel alive and no flashy transitions.

Right now, the player is separate from the chatbot, but everything points toward convergence. The idea is for design and conversation to eventually meet in one space.

You talk, it listens, then it acts. You can queue songs, skip, shuffle, or turn it up, all through natural language. No memorising keywords, no command syntax, no visual overload. The UI responds immediately.
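Behind the natural language, each of those requests can end up as one small player command. A minimal sketch, assuming the model has already turned the sentence into a structured command (the `PlayerCommand` type and `toPlayerRequest` are hypothetical); the URLs are Spotify Web API player endpoints for queue, next, shuffle and volume. A relative request like “turn it up” would first need the current `volume_percent` from the playback state.

```typescript
// Hypothetical structured form of what the user asked for.
type PlayerCommand =
  | { kind: "queue"; trackUri: string }
  | { kind: "skip" }
  | { kind: "shuffle"; on: boolean }
  | { kind: "volume"; percent: number };

interface PlayerRequest {
  method: "POST" | "PUT";
  url: string;
}

const PLAYER = "https://api.spotify.com/v1/me/player";

// Map each command to the Spotify endpoint that performs it (all take query params, no body).
function toPlayerRequest(cmd: PlayerCommand): PlayerRequest {
  switch (cmd.kind) {
    case "queue":
      return { method: "POST", url: `${PLAYER}/queue?uri=${encodeURIComponent(cmd.trackUri)}` };
    case "skip":
      return { method: "POST", url: `${PLAYER}/next` };
    case "shuffle":
      return { method: "PUT", url: `${PLAYER}/shuffle?state=${cmd.on}` };
    case "volume": {
      // Spotify expects an integer from 0 to 100.
      const pct = Math.round(Math.min(100, Math.max(0, cmd.percent)));
      return { method: "PUT", url: `${PLAYER}/volume?volume_percent=${pct}` };
    }
    default: {
      const unreachable: never = cmd;
      throw new Error(`Unknown command: ${JSON.stringify(unreachable)}`);
    }
  }
}
```

Each `PlayerRequest` is then sent with the user’s bearer token; keeping the mapping as a pure function means the UI can update the moment the request succeeds.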

Our Collective

We’re a collective of people who build with language and interaction — writers, designers, developers, researchers, and people who blur those lines.

The goal isn’t to chase hype or crank out demos. It’s to create things that actually work. Tools that feel alive. Interfaces that listen and do something useful.

This Spotify Agent is our first working piece. Not the biggest. Just the first to fly off 🎈.

Built by two brothers — Michele and Claudio Romano — as the first of many Twelve Balloons experiments.